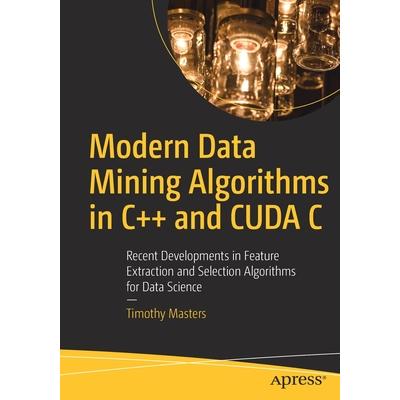

Modern Data Mining Algorithms in C++ and Cuda C

內容簡介

Discover a variety of data-mining algorithms that are useful for selecting small sets of important features from among unwieldy masses of candidates, or extracting useful features from measured variables.

As a serious data miner you will often be faced with thousands of candidate features for your prediction or classification application, with most of the features being of little or no value. You'll know that many of these features may be useful only in combination with certain other features while being practically worthless alone or in combination with most others. Some features may have enormous predictive power, but only within a small, specialized area of the feature space. The problems that plague modern data miners are endless. This book helps you solve this problem by presenting modern feature selection techniques and the code to implement them. Some of these techniques are: Forward selection component analysis Local feature selectionLinking features and a target with a hidden Markov modelImprovements on traditional stepwise selectionNominal-to-ordinal conversion

All algorithms are intuitively justified and supported by the relevant equations and explanatory material. The author also presents and explains complete, highly commented source code.

The example code is in C++ and CUDA C but Python or other code can be substituted; the algorithm is important, not the code that's used to write it.

What You Will Learn Combine principal component analysis with forward and backward stepwise selection to identify a compact subset of a large collection of variables that captures the maximum possible variation within the entire set. Identify features that may have predictive power over only a small subset of the feature domain. Such features can be profitably used by modern predictive models but may be missed by other feature selection methods. Find an underlying hidden Markov model that controls the distributions of feature variables and the target simultaneously. The memory inherent in this method is especially valuable in high-noise applications such as prediction of financial markets.Improve traditional stepwise selection in three ways: examine a collection of 'best-so-far' feature sets; test candidate features for inclusion with cross validation to automatically and effectively limit model complexity; and at each step estimate the probability that our results so far could be just the product of random good luck. We also estimate the probability that the improvement obtained by adding a new variable could have been just good luck. Take a potentially valuable nominal variable (a category or class membership) that is unsuitable for input to a prediction model, and assign to each category a sensible numeric value that can be used as a model input.

Who This Book Is For

Intermediate to advanced data science programmers and analysts.

配送方式

-

台灣

- 國內宅配:本島、離島

-

到店取貨:

不限金額免運費

-

海外

- 國際快遞:全球

-

港澳店取:

訂購/退換貨須知

加入金石堂 LINE 官方帳號『完成綁定』,隨時掌握出貨動態:

商品運送說明:

- 本公司所提供的產品配送區域範圍目前僅限台灣本島。注意!收件地址請勿為郵政信箱。

- 商品將由廠商透過貨運或是郵局寄送。消費者訂購之商品若無法送達,經電話或 E-mail無法聯繫逾三天者,本公司將取消該筆訂單,並且全額退款。

- 當廠商出貨後,您會收到E-mail出貨通知,您也可透過【訂單查詢】確認出貨情況。

- 產品顏色可能會因網頁呈現與拍攝關係產生色差,圖片僅供參考,商品依實際供貨樣式為準。

- 如果是大型商品(如:傢俱、床墊、家電、運動器材等)及需安裝商品,請依商品頁面說明為主。訂單完成收款確認後,出貨廠商將會和您聯繫確認相關配送等細節。

- 偏遠地區、樓層費及其它加價費用,皆由廠商於約定配送時一併告知,廠商將保留出貨與否的權利。

提醒您!!

金石堂及銀行均不會請您操作ATM! 如接獲電話要求您前往ATM提款機,請不要聽從指示,以免受騙上當!

退換貨須知:

**提醒您,鑑賞期不等於試用期,退回商品須為全新狀態**

-

依據「消費者保護法」第19條及行政院消費者保護處公告之「通訊交易解除權合理例外情事適用準則」,以下商品購買後,除商品本身有瑕疵外,將不提供7天的猶豫期:

- 易於腐敗、保存期限較短或解約時即將逾期。(如:生鮮食品)

- 依消費者要求所為之客製化給付。(客製化商品)

- 報紙、期刊或雜誌。(含MOOK、外文雜誌)

- 經消費者拆封之影音商品或電腦軟體。

- 非以有形媒介提供之數位內容或一經提供即為完成之線上服務,經消費者事先同意始提供。(如:電子書、電子雜誌、下載版軟體、虛擬商品…等)

- 已拆封之個人衛生用品。(如:內衣褲、刮鬍刀、除毛刀…等)

- 若非上列種類商品,均享有到貨7天的猶豫期(含例假日)。

- 辦理退換貨時,商品(組合商品恕無法接受單獨退貨)必須是您收到商品時的原始狀態(包含商品本體、配件、贈品、保證書、所有附隨資料文件及原廠內外包裝…等),請勿直接使用原廠包裝寄送,或於原廠包裝上黏貼紙張或書寫文字。

- 退回商品若無法回復原狀,將請您負擔回復原狀所需費用,嚴重時將影響您的退貨權益。

商品評價