iPhone 8

This book is a user manual that shows you how to get the most out of your iPhone. Millions of people all over the world use the iPhone, a hugely popular smartphone that offers many advanced and convenient features, including a camera like no other, Siri, turn-by-turn driving directions, a calendar, and a lot more. If you are acquiring the iPhone 8 or iPhone 8 Plus for the first time, or you need more information on how to use your device well, this book is for you. Its easy-to-follow steps will help you manage, personalize, and communicate better with your new iPhone 8 or iPhone 8 Plus.

In this book, you will learn:

- The correct iPhone 8 set-up process
- iPhone 8 Plus features
- How to personalize your iPhone
- How to fix common iPhone 8 problems
- 23 top iPhone tips and tricks
- iPhone 8 series security features
- Apple ID and Face ID set-up and tricks
- Apple Face ID hidden features
- All the iPhone 8 gestures you should know
- How to hide SMS notification content on the iPhone screen
- How to use the virtual Home button

...and a lot more.

This guide is written for beginners of every age, from kids and teens to adults and seniors. It gives simplified, step-by-step instructions on everything you should know about the iPhone 8 and iPhone 8 Plus, shows how you can customize the iPhone, and shares tips and tricks you will not find in the original iPhone manual. It also makes a useful gift for friends and family.

The Model Thinker

Work with data like a pro using this guide that breaks down how to organize, apply, and most importantly, understand what you are analyzing in order to become a true data ninja. From the stock market to genomics laboratories, census figures to marketing email blasts, we are awash with data. But as anyone who has ever opened up a spreadsheet packed with seemingly infinite lines of data knows, numbers aren't enough: we need to know how to make those numbers talk. In The Model Thinker, social scientist Scott E. Page shows us the mathematical, statistical, and computational models--from linear regression to random walks and far beyond--that can turn anyone into a genius. At the core of the book is Page's "many-model paradigm," which shows the reader how to apply multiple models to organize the data, leading to wiser choices, more accurate predictions, and more robust designs. The Model Thinker provides a toolkit for business people, students, scientists, pollsters, and bloggers to make them better, clearer thinkers, able to leverage data and information to their advantage.

Granular Video Computing

This volume links the concept of granular computing, using deep learning and the Internet of Things, to object tracking for video analysis. It describes how the uncertainties involved in video processing can be handled in rough-set-theoretic granular computing frameworks. The issues addressed in this volume include object tracking from videos in constrained situations, occlusion and overlap handling, measuring the reliability of tracking methods, object recognition and linguistic interpretation in video scenes, and event prediction from videos. The book also looks at ways to reduce data dependency in the context of unsupervised training (without manual interaction, labeled data, or prior information).

This book may be used as both a textbook and a reference book for graduate students and researchers in computer science, electrical engineering, system science, data science, and information technology, and is recommended for both students and practitioners working in computer vision, machine learning, video analytics, image analytics, artificial intelligence, system design, rough set theory, granular computing, and soft computing.

Industrial IoT Technologies and Applications

This book constitutes the thoroughly refereed post-conference proceedings of the 4th International Conference on Industrial IoT Technologies and Applications, IoT 2020, held in December 2020. Due to the COVID-19 pandemic, the conference was held virtually. The widespread deployment of wireless sensor networks, clouds, industrial robots, embedded computing, and inexpensive sensors has facilitated industrial Internet of Things (Industrial IoT) technologies and fostered some emerging applications. The 14 carefully reviewed papers are a selection from 28 submissions and detail topics in the context of IoT for a smarter industry.

Modeling and Control of Drug Delivery Systems

Modeling and Control of Drug Delivery Systems provides comprehensive coverage of various drug delivery and targeting systems and the state-of-the-art work related to them, ranging from theory to real-world deployment and future perspectives. Various drug delivery and targeting systems have been developed to minimize drug degradation and adverse effects and to increase drug bioavailability. Site-specific drug delivery may be an active process, a passive process, or both. Improving delivery techniques so that they minimize toxicity and increase efficacy offers significant potential benefits to patients and opens up new markets for pharmaceutical companies. This book will interest researchers working in the field of drug delivery systems (DDS), as it provides an essential source of information for pharmaceutical scientists and pharmacologists working in academia as well as in industry. In addition, it has useful information for pharmaceutical physicians and scientists in the many disciplines involved in developing DDS, such as chemical engineering, biomedical engineering, protein engineering, and gene therapy.

Data Science Crash Course for Beginners with Python

Data Science is here to stay. The tremendous growth in the volume, velocity, and variety of data has a substantial impact on every aspect of a business. While data continues to grow exponentially, accuracy remains a problem. This is where data scientists play a decisive role. A data scientist analyzes data, discovers new insights, paints a picture, and creates a vision. A competent data scientist provides a business with the competitive edge it needs and addresses pressing business problems. Data Science Crash Course for Beginners with Python presents you with a hands-on approach to learning data science fast.

How Is This Book Different?

Every book by AI Publishing has been carefully crafted. This book places equal emphasis on the theoretical and the practical aspects of data science. Each chapter provides the theoretical background behind the numerous data science techniques, and practical examples explain how these techniques work. In the Further Reading section of each chapter, you will find links to informative data science posts. This book presents you with the tools and packages you need to kick-start data science projects and solve practical problems. Special emphasis is laid on the main stages of a data science pipeline: data acquisition, data preparation, exploratory data analysis, data modeling and evaluation, and interpretation of the results. In the Data Science Resources section, links to data science resources, articles, interviews, and data science newsletters are provided. The author has also put together a list of contests and competitions that you can try on your own. Another benefit of buying this book is that you get instant access, at no extra cost, to all the learning material presented with it (PDFs, Python code, exercises, and references) on the publisher's website.

The datasets used in this book can be downloaded at runtime or accessed via the Resources/Datasets folder. The author simplifies your learning by guiding you through everything. The step-by-step description of how to install the software you need for implementing the various data science techniques in this book is intended to make your learning easier, so you can experiment with the practical aspects of data science right from the beginning. You'll also find the quick course on Python programming in the second and third chapters helpful, especially if you are new to Python. Because this book gives you access to all the code and datasets, a computer with internet access is sufficient to get started.

The topics covered include:

- Introduction to Data Science and Decision Making
- Python Installation and Libraries for Data Science
- Review of Python for Data Science
- Data Acquisition
- Data Preparation (Preprocessing)
- Exploratory Data Analysis
- Data Modeling and Evaluation Using Machine Learning
- Interpretation and Reporting of Findings
- Data Science Projects
- Key Insights and Further Avenues

Python Machine Learning for Beginners

Machine Learning (ML) and Artificial Intelligence (AI) are here to stay. Yes, that's right. Based on a significant amount of data and evidence, it's obvious that ML and AI are here to stay.

Consider any industry today. The practical applications of ML are really driving business results. Whether it's healthcare, e-commerce, government, transportation, social media sites, financial services, manufacturing, oil and gas, or marketing and sales, you name it. The list goes on. There's no doubt that ML is going to play a decisive role in every domain in the future.

But what does a Machine Learning professional do?

A Machine Learning specialist develops intelligent algorithms that learn from data and adapt to the data quickly. These algorithms then make accurate predictions.

Python Machine Learning for Beginners presents you with a hands-on approach to learning ML fast.

How Is This Book Different?

AI Publishing strongly believes in a learning-by-doing methodology. With this in mind, we have crafted this book with care. You will find that the emphasis on the theoretical aspects of machine learning is equal to the emphasis on the practical aspects of the subject matter.

You'll learn about data analysis and visualization in great detail in the first half of the book. Then, in the second half, you'll learn about machine learning and statistical models for data science.

Each chapter presents the theoretical framework behind the different data science and machine learning techniques, and practical examples illustrate how these techniques work.

When you buy this book, you get instant access to all the related learning material presented with it (references, PDFs, Python code, and exercises) on the publisher's website. All this material is available to you at no extra cost.

You can download the ML datasets used in this book at runtime, or you can access them via the Resources/Datasets folder.

You'll also find the short course on Python programming in the second chapter useful, especially if you are new to Python. Since this book gives you access to all the Python code and datasets, you only need a computer with internet access to get started.

The topics covered include:

- Introduction and Environment Setup
- Python Crash Course
- Python NumPy Library for Data Analysis
- Introduction to Pandas Library for Data Analysis
- Data Visualization via Matplotlib, Seaborn, and Pandas Libraries
- Solving Regression Problems in ML Using Sklearn Library
- Solving Classification Problems in ML Using Sklearn Library
- Data Clustering with ML Using Sklearn Library
- Deep Learning with Python TensorFlow 2.0
- Dimensionality Reduction with PCA and LDA Using Sklearn

Machine Learning, Big Data, and IoT for Medical Informatics

Machine Learning, Big Data, and IoT for Medical Informatics focuses on the latest techniques adopted in the field of medical informatics. In medical informatics, machine learning, big data, and IoT-based techniques play a significant role in disease diagnosis and prediction. In the medical field, the structure of the data is equally important for accurate predictive analytics because the data are heterogeneous, for example ECG data, X-ray data, and image data. Thus, this book focuses on the usability of machine learning, big data, and IoT-based techniques in handling structured and unstructured data. It also emphasizes privacy-preservation techniques for medical data. This volume can be used as a reference book for scientists, researchers, practitioners, and academicians working in the field of intelligent medical informatics. It can also be used as a reference book for both undergraduate and graduate courses such as medical informatics, machine learning, big data, and IoT.

Data Modeling for Quality

This book is for all data modelers, data architects, and database designers, be they novices who want to learn what's involved in data modeling, or experienced modelers who want to brush up their skills. A novice will not only gain an overview of data modeling, they will also learn how to follow the data modeling process, including the activities required for each step. The experienced practitioner will discover (or rediscover) techniques to ensure that data models accurately reflect business requirements.

This book describes rigorous yet easily implemented approaches to:

- modeling of business information requirements for review by business stakeholders before development of the logical data model
- normalizing data, based on simple questions rather than the formal definitions which many modelers find intimidating
- naming and defining concepts and attributes
- modeling of time-variant data
- documenting business rules governing both the real world and data
- data modeling in an Agile project
- managing data model change in any type of project
- transforming a business information model to a logical data model against which developers can code
- implementing the logical data model in a traditional relational DBMS, an SQL:2003-compliant DBMS, an object-relational DBMS, or in XML.

Part 1 describes business information models in depth, including:

- the importance of modeling business information requirements before embarking on a logical data model
- business concepts (entity classes)
- attributes of business concepts
- attribute classes as an alternative to DBMS data types
- relationships between business concepts
- time-variant data
- generalization and specialization of business concepts
- naming and defining the components of the business information model
- business rules governing data, including a distinction between real-world rules and data rules.

Part 2 journeys from requirements to a working data resource, covering:

- sourcing data requirements
- developing the business information model
- communicating it to business stakeholders for review, both as diagrams and verbally
- managing data model change
- transforming the business information model into a logical data model of stored data for implementation in a relational or object-relational DBMS
- attribute value representation and data constraints (important but often overlooked)
- modeling data vault, dimensional and XML data.

Python Data Analysis - Third Edition

Understand data analysis pipelines using machine learning algorithms and techniques with this practical guide

Key Features:

- Prepare and clean your data to use it for exploratory analysis, data manipulation, and data wrangling
- Discover supervised, unsupervised, probabilistic, and Bayesian machine learning methods
- Get to grips with graph processing and sentiment analysis

Book Description:

Data analysis enables you to generate value from small and big data by discovering new patterns and trends, and Python is one of the most popular tools for analyzing a wide variety of data. With this book, you'll get up and running using Python for data analysis by exploring the different phases and methodologies used in data analysis and learning how to use modern libraries from the Python ecosystem to create efficient data pipelines.

Starting with the essential statistical and data analysis fundamentals using Python, you'll perform complex data analysis and modeling, data manipulation, data cleaning, and data visualization using easy-to-follow examples. You'll then understand how to conduct time series analysis and signal processing using ARMA models. As you advance, you'll get to grips with smart processing and data analytics using machine learning algorithms such as regression, classification, Principal Component Analysis (PCA), and clustering. In the concluding chapters, you'll work on real-world examples to analyze textual and image data using natural language processing (NLP) and image analytics techniques, respectively. Finally, the book will demonstrate parallel computing using Dask.

By the end of this data analysis book, you'll be equipped with the skills you need to prepare data for analysis and create meaningful data visualizations for forecasting values from data.

What You Will Learn:

- Explore data science and its various process models
- Perform data manipulation using NumPy and pandas for aggregating, cleaning, and handling missing values
- Create interactive visualizations using Matplotlib, Seaborn, and Bokeh
- Retrieve, process, and store data in a wide range of formats
- Understand data preprocessing and feature engineering using pandas and scikit-learn
- Perform time series analysis and signal processing using sunspot cycle data
- Analyze textual data and image data to perform advanced analysis
- Get up to speed with parallel computing using Dask

Who this book is for:

This book is for data analysts, business analysts, statisticians, and data scientists looking to learn how to use Python for data analysis. Students and academic faculties will also find this book useful for learning and teaching Python data analysis using a hands-on approach. A basic understanding of math and working knowledge of the Python programming language will help you get started with this book.

Smoke - An Android Echo Chat Software Application

Smoke is a personal chat messenger, an Android Echo software application known as the world's first mobile McEliece messenger (McEliece, Fujisaka and Pointcheval). This Volume I is about the chat client, Smoke. Volume II, by the same author, covers the corresponding SmokeStack chat server. This printed edition of the open-source technical website reference documentation is addressed to students, teachers, and developers who want to create a personal chat messenger based on Java for learning and teaching purposes. The book introduces TCP over Echo (TCPE), Cryptographic Discovery, Fiasco Forwarding Keys, an Argon2id key-derivation function, the Steam file transfer protocol, the Juggling Juggernaut Protocol for Juggernaut Keys, and further topics.

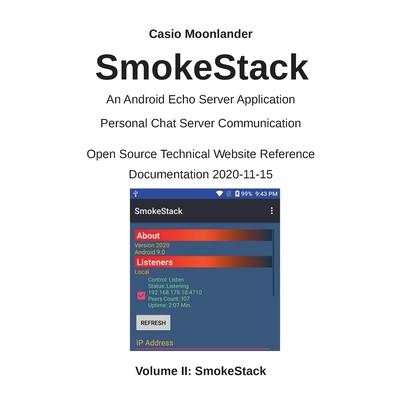

SmokeStack - An Android Echo Chat Server Application

SmokeStack is a personal chat server, an Android Echo server application for the chat messenger Smoke, which is known as the world's first mobile McEliece messenger (McEliece, Fujisaka and Pointcheval). This Volume II is about the chat server, SmokeStack. Volume I, by the same author, covers the corresponding Smoke chat messenger client. This printed edition of the open-source technical website reference documentation is addressed to students, teachers, and developers who want to create a personal chat server on Android based on Java for learning and teaching purposes. The book introduces TCP over Echo (TCPE), Cryptographic Discovery, Fiasco Forwarding Keys, an Argon2id key-derivation function, the Steam file transfer protocol, the Juggling Juggernaut Protocol for Juggernaut Keys, mobile server technologies, Ozone Postboxes, and further topics.

Supercomputing

This book constitutes the refereed post-conference proceedings of the 6th Russian Supercomputing Days, RuSCDays 2020, held in Moscow, Russia, in September 2020. The conference was held virtually due to the COVID-19 pandemic. The 51 revised full and 4 revised short papers presented were carefully reviewed and selected from 106 submissions. The papers are organized in the following topical sections: parallel algorithms; supercomputer simulation; HPC, BigData, AI: architectures, technologies, tools; and distributed and cloud computing.

Knowledge-Based Explorable Extended Reality Environments

This book presents explorable XR environments--their rationale, concept, architectures as well as methods and tools for spatial-temporal composition based on domain knowledge, including geometrical, presentational, structural and behavioral elements. Explorable XR environments enable monitoring, analyzing, comprehending, examining and controlling users' and objects' behavior and features as well as users' skills, experience, interests and preferences.

The E-XR approach proposed in this book relies on two main pillars. The first is knowledge representation technologies, such as logic programming, description logics and the semantic web, which permit automated reasoning and queries. The second is imperative programming languages, which are a prevalent solution for building XR environments. Potential applications of E-XR are in a variety of domains, e.g., education, training, medicine, design, tourism, marketing, merchandising, engineering and entertainment.

The book's readers will understand the emerging domain of explorable XR environments with their possible applications. Special attention is given to an in-depth discussion of the field with taxonomy and classification of the available related solutions. Examples and design patterns of knowledge-based composition and exploration of XR behavior are provided, and an extensive evaluation and analysis of the proposed approach is included. This book helps researchers in XR systems, 3D modeling tools and game engines as well as lecturers and students who search for clearly presented information supported by use cases. For XR and game programmers as well as graphic designers, the book is a valuable source of information and examples in XR development. Professional software and web developers may find the book interesting as the proposed ideas are illustrated by rich examples demonstrating design patterns and guidelines in object-oriented, procedural and declarative programming.

L'apprentissage automatique en action

Are you looking for an introductory book to familiarize yourself with machine learning? My book explains the basic concepts in a simple and understandable way. Once you have read it, you will have a solid grasp of the basic principles, which will make it easier to move on to a more advanced book if you want to learn more.

Development of Clinical Decision Support Systems Using Bayesian Networks

For the development of clinical decision support systems based on Bayesian networks, Mario A. Cypko investigates comprehensive expert models of multidisciplinary clinical treatment decisions and solves challenges in their modeling. The presented methods, models and tools are developed in close and intensive cooperation between knowledge engineers and clinicians. In the course of this study, laryngeal cancer serves as an exemplary treatment decision. The reader is guided through a development process and new opportunities for research and development are opened up: in modeling and validation of workflows, guided modeling, semi-automated modeling, advanced Bayesian networks, model-user interaction, inter-institutional modeling and quality management.

Big Data

Big Data: A Tutorial-Based Approach explores the tools and techniques used to bring about the marriage of structured and unstructured data. It focuses on Hadoop Distributed Storage and MapReduce Processing by implementing (i) Tools and Techniques of Hadoop Eco System, (ii) Hadoop Distributed File System Infrastructure, and (iii) efficient MapReduce processing. The book includes Use Cases and Tutorials to provide an integrated approach that answers the 'What', 'How', and 'Why' of Big Data.

Features

- Identifies the primary drivers of Big Data
- Walks readers through the theory, methods and technology of Big Data
- Explains how to handle the 4 V's of Big Data in order to extract value for better business decision making
- Shows how and why data connectors are critical and necessary for Agile text analytics
- Includes in-depth tutorials to perform necessary set-ups, installation, configuration and execution of important tasks
- Explains the command-line as well as the graphical interface to a powerful data exchange tool between Hadoop and legacy relational databases (RDBMS)

Distributed Artificial Intelligence

This book constitutes the refereed proceedings of the Second International Conference on Distributed Artificial Intelligence, DAI 2020, held in Nanjing, China, in October 2020. The 9 full papers presented in this book were carefully reviewed and selected from 22 submissions. DAI aims at bringing together international researchers and practitioners in related areas including general AI, multiagent systems, distributed learning, computational game theory, etc., to provide a single, high-profile, internationally renowned forum for research in the theory and practice of distributed AI. Due to the COVID-19 pandemic, this event was held virtually.

Value Flows into SAP Margin Analysis (CO-PA) in S/4HANA

This practical manual guides you step by step through the flows of actual values into SAP Profitability Analysis (CO-PA) and the forms these flows take in S/4HANA, including the account-based CO-PA required there. The book presents the technical prerequisites and changes that SAP S/4HANA brings compared to the previous product, ERP, and discusses whether there is any truth in rumors such as "The Controlling module will no longer exist." Using a simple, continuous example, the authors, who have many years of experience with SAP Controlling, illustrate how an SAP value flow progresses through the individual stages of the process: from a sales order, through production, right up to the issue of goods and invoicing. The book shows where you will find these values at each respective point in time in Financials (FI) and Controlling (CO). The authors explain both the business and the SAP technical view in detail and highlight the benefits of the innovative approach under S/4HANA, now known under the new name of "SAP Margin Analysis." Furthermore, the book delivers a plea for CO-PA to be used as a tool for sales management, a tool that allows the widest possible variety of business analyses.

- Value flows based on the logistical sales and production process
- Comparison of costing-based and account-based CO-PA
- Presentation of the changes in the value flow compared to SAP ERP
- Continuous numerical example right up to closing activities

Communication Technologies for Vehicles

This book constitutes the refereed proceedings of the 15th International Workshop on Communication Technologies for Vehicles, Nets4Cars/Nets4Trains/Nets4Aircraft 2020, held in Bordeaux, France, in November 2020. The 18 full papers were carefully reviewed and selected from 22 submissions. The selected papers present original research results in areas related to the physical layer, communication protocols and standards, mobility and traffic models, experimental and field operational testing, and performance analysis.

Essential Statistics for Non-STEM Data Analysts

Reinforce your understanding of data science and data analysis from a statistical perspective to extract meaningful insights from your data using Python programming

Key features

- Work your way through the entire data analysis pipeline with statistics concerns in mind to make reasonable decisions
- Understand how various data science algorithms function
- Build a solid foundation in statistics for data science and machine learning using Python-based examples

Book Description

Statistics remains the backbone of modern analysis tasks, helping you to interpret the results produced by data science pipelines. This book is a detailed guide covering the math and various statistical methods required for undertaking data science tasks.

The book starts by showing you how to preprocess data and inspect distributions and correlations from a statistical perspective. You'll then get to grips with the fundamentals of statistical analysis and apply its concepts to real-world datasets. As you advance, you'll find out how statistical concepts emerge from different stages of data science pipelines, understand the summary of datasets in the language of statistics, and use it to build a solid foundation for robust data products such as explanatory models and predictive models. Once you've uncovered the working mechanism of data science algorithms, you'll cover essential concepts for efficient data collection, cleaning, mining, visualization, and analysis. Finally, you'll implement statistical methods in key machine learning tasks such as classification, regression, tree-based methods, and ensemble learning.

By the end of this Essential Statistics for Non-STEM Data Analysts book, you'll have learned how to build and present a self-contained, statistics-backed data product to meet your business goals.

What you will learn

- Find out how to grab and load data into an analysis environment
- Perform descriptive analysis to extract meaningful summaries from data
- Discover probability, parameter estimation, hypothesis tests, and experiment design best practices
- Get to grips with resampling and bootstrapping in Python
- Delve into statistical tests with variance analysis, time series analysis, and A/B test examples
- Understand the statistics behind popular machine learning algorithms
- Answer questions on statistics for data scientist interviews

Who this book is for

This book is an entry-level guide for data science enthusiasts, data analysts, and anyone starting out in the field of data science and looking to learn the essential statistical concepts with the help of simple explanations and examples. If you're a developer or student with a non-mathematical background, you'll find this book useful. Working knowledge of the Python programming language is required.

GIS Guidebook

The new Task Items in ArcGIS Pro allow you to develop a step-by-step script that guides users through a workflow or process. This book is designed to teach you how to document, streamline, and develop the processes that step through your workflows using Tasks. The book contains discussions of the topics associated with tasks, and examples of how they are used. Each section also has descriptions of the building process and parameter settings for these items so that you will not only understand the examples shown here but be able to transfer that knowledge to your own workflows and processes. After each of the discussion sections there are exercises to work through that will provide some hands-on experience with the features and settings, including tips and tricks for their best use. At the end of the book are two project suggestions that you can complete on your own, either with the provided data or using your own data sets.

Semantic Systems. In the Era of Knowledge Graphs

This open access book constitutes the refereed proceedings of the 16th International Conference on Semantic Systems, SEMANTiCS 2020, held in Amsterdam, The Netherlands, in September 2020. The conference was held virtually due to the COVID-19 pandemic.

Artificial Intelligence and Soft Computing

The two-volume set LNCS 12415 and 12416 constitutes the refereed proceedings of the 19th International Conference on Artificial Intelligence and Soft Computing, ICAISC 2020, held in Zakopane, Poland, in October 2020. The conference was held virtually due to the COVID-19 pandemic.

The 112 revised full papers presented were carefully reviewed and selected from 265 submissions. The papers included in the first volume are organized in the following six parts: neural networks and their applications; fuzzy systems and their applications; evolutionary algorithms and their applications; pattern classification; bioinformatics, biometrics and medical applications; artificial intelligence in modeling and simulation.

The papers included in the second volume are organized in the following four parts: computer vision, image and speech analysis; data mining; various problems of artificial intelligence; agent systems, robotics and control.

Data Engineering with Python

Build, monitor, and manage real-time data pipelines to create data engineering infrastructure efficiently using open-source Apache projects. Key features: Become well-versed in data architectures, data preparation, and data optimization skills with the help of practical examples. Design data models and learn how to extract, transform, and load (ETL) data using Python. Schedule, automate, and monitor complex data pipelines in production. Book description: Data engineering provides the foundation for data science and analytics, and forms an important part of all businesses. This book will help you to explore various tools and methods that are used for understanding the data engineering process using Python. The book will show you how to tackle challenges commonly faced in different aspects of data engineering. You'll start with an introduction to the basics of data engineering, along with the technologies and frameworks required to build data pipelines to work with large datasets. You'll learn how to transform and clean data and perform analytics to get the most out of your data. As you advance, you'll discover how to work with big data of varying complexity and production databases, and build data pipelines. Using real-world examples, you'll build architectures on which you'll learn how to deploy data pipelines. By the end of this Python book, you'll have gained a clear understanding of data modeling techniques, and will be able to confidently build data engineering pipelines for tracking data, running quality checks, and making necessary changes in production. What you will learn: Understand how data engineering supports data science workflows. Discover how to extract data from files and databases and then clean, transform, and enrich it. Configure processors for handling different file formats as well as both relational and NoSQL databases. Find out how to implement a data pipeline and dashboard to visualize results. Use staging and validation to check data before landing in the warehouse. Build real-time pipelines with staging areas that perform validation and handle failures. Get to grips with deploying pipelines in the production environment. Who this book is for: This book is for data analysts, ETL developers, and anyone looking to get started with or transition to the field of data engineering or refresh their knowledge of data engineering using Python. This book will also be useful for students planning to build a career in data engineering or IT professionals preparing for a transition. No previous knowledge of data engineering is required.

Tools and Algorithms for the Construction and Analysis of Systems

This book is Open Access under a CC BY licence. This book, LNCS 11429, is part III of the proceedings of the 25th International Conference on Tools and Algorithms for the Construction and Analysis of Systems, TACAS 2019, which took place in Prague, Czech Republic, in April 2019, held as part of the European Joint Conferences on Theory and Practice of Software, ETAPS 2019. It's a special volume on the occasion of the 25 year anniversary of TACAS. This work was published by Saint Philip Street Press pursuant to a Creative Commons license permitting commercial use. All rights not granted by the work's license are retained by the author or authors.

6GN for Future Wireless Networks

This book constitutes the proceedings of the Third International Conference on 6G for Future Wireless Networks, 6GN 2020, held in Tianjin, China, in August 2020. The conference was held virtually due to the COVID-19 pandemic. The 45 full papers were selected from 109 submissions and present the state of the art and practical applications of 6G technologies. The papers are arranged thematically on network scheduling and optimization; wireless system and platform; intelligent applications; network performance evaluation; cyber security and privacy; technologies for private 5G/6G.

Implementing Azure DevOps Solutions

A comprehensive guide to becoming a skilled Azure DevOps engineer. Key features: Explore a step-by-step approach to designing and creating a successful DevOps environment. Understand how to implement continuous integration and continuous deployment pipelines on Azure. Integrate and implement security, compliance, containers, and databases in your DevOps strategies. Book description: Implementing Azure DevOps Solutions helps DevOps engineers and administrators to leverage Azure DevOps Services to master practices such as continuous integration and continuous delivery (CI/CD), containerization, and zero downtime deployments. This book starts with the basics of continuous integration, continuous delivery, and automated deployments. You will then learn how to apply configuration management and Infrastructure as Code (IaC) along with managing databases in DevOps scenarios. Next, you will delve into fitting security and compliance with DevOps. As you advance, you will explore how to instrument applications, and gather metrics to understand application usage and user behavior. The latter part of this book will help you implement a container build strategy and manage Azure Kubernetes Services. Lastly, you will understand how to create your own Azure DevOps organization, along with covering quick tips and tricks to confidently apply effective DevOps practices. By the end of this book, you'll have gained the knowledge you need to ensure seamless application deployments and business continuity. What you will learn: Get acquainted with Azure DevOps Services and DevOps practices. Implement CI/CD processes. Build and deploy a CI/CD pipeline with automated testing on Azure. Integrate security and compliance in pipelines. Understand and implement Azure Container Services. Become well versed in closing the loop from production back to development. Who this book is for: This DevOps book is for software developers and operations specialists interested in implementing DevOps practices for the Azure cloud. Application developers and IT professionals with some experience in software development and development practices will also find this book useful. Some familiarity with Azure DevOps basics is an added advantage. Professionals preparing for the Exam AZ-400: Designing and Implementing Microsoft DevOps Solutions certification will also find this book useful.

Blockchain Data Analytics for Dummies

Get ahead of the curve--learn about big data on the blockchain. Blockchain came to prominence as the disruptive technology that made cryptocurrencies work. Now, data pros are using blockchain technology for faster real-time analysis, better data security, and more accurate predictions. Blockchain Data Analytics For Dummies is your quick-start guide to harnessing the potential of blockchain. Inside this book, technologists, executives, and data managers will find information and inspiration to adopt blockchain as a big data tool. Blockchain expert Michael G. Solomon shares his insight on what the blockchain is and how this new tech is poised to disrupt data. Set your organization on the cutting edge of analytics, before your competitors get there! Learn how blockchain technologies work and how they can integrate with big data. Discover the power and potential of blockchain analytics. Establish data models and quickly mine for insights and results. Create data visualizations from blockchain analysis. Discover how blockchains are disrupting the data world with this exciting title in the trusted For Dummies line!

Fifty Years of Relational, and Other Database Writings

Fifty years of relational. It's hard to believe the relational model has been around now for over half a century! But it has: it was born on August 19th, 1969, when Codd's first database paper was published. And Chris Date has been involved with it for almost the whole of that time, working closely with Codd for many years and publishing the very first, and definitive, book on the subject in 1975. In this book's title essay, Chris offers his own unique perspective (two chapters) on those fifty years. No database professional can afford to miss this one-of-a-kind history. But there's more to this book than just a little personal history. Another unique feature is an extensive and in-depth discussion (nine chapters) of a variety of frequently asked questions on relational matters, covering such topics as mathematics and the relational model; relational algebra; predicates; relation-valued attributes; keys and normalization; missing information; and the SQL language. Another part of the book offers detailed responses to critics (four chapters). Finally, the book also contains the text of several recent interviews with Chris Date, covering such matters as RM/V2, XML, NoSQL, The Third Manifesto, and how SQL came to dominate the database landscape. About Chris: Chris Date has a stature that is unique in the database industry. He is best known for his textbook An Introduction to Database Systems (Addison-Wesley), which has sold some 900,000 copies at the time of writing. He enjoys a reputation that is second to none for his ability to explain complex technical issues in a clear and understandable fashion. He was inducted into the Computing Industry Hall of Fame in 2004.

Mastering Palo Alto Networks

Set up next-generation firewalls from Palo Alto Networks and get to grips with configuring and troubleshooting using the PAN-OS platform. Key features: Understand how to optimally use PAN-OS features. Build firewall solutions to safeguard local, cloud, and mobile networks. Protect your infrastructure and users by implementing robust threat prevention solutions. Book description: To safeguard against security threats, it is crucial to ensure that your organization is effectively secured across networks, mobile devices, and the cloud. Palo Alto Networks' integrated platform makes it easy to manage network and cloud security along with endpoint protection and a wide range of security services. With this book, you'll understand Palo Alto Networks and learn how to implement essential techniques, right from deploying firewalls through to advanced troubleshooting. The book starts by showing you how to set up and configure the Palo Alto Networks firewall, helping you to understand the technology and appreciate the simple, yet powerful, PAN-OS platform. Once you've explored the web interface and command-line structure, you'll be able to predict expected behavior and troubleshoot anomalies with confidence. You'll learn why and how to create strong security policies and discover how the firewall protects against encrypted threats. In addition to this, you'll get to grips with identifying users and controlling access to your network with user IDs and even prioritize traffic using quality of service (QoS). The book will show you how to enable special modes on the firewall for shared environments and extend security capabilities to smaller locations. By the end of this network security book, you'll be well-versed with advanced troubleshooting techniques and best practices recommended by an experienced security engineer and Palo Alto Networks expert. What you will learn: Perform administrative tasks using the web interface and command-line interface (CLI). Explore the core technologies that will help you boost your network security. Discover best practices and considerations for configuring security policies. Run and interpret troubleshooting and debugging commands. Manage firewalls through Panorama to reduce administrative workloads. Protect your network from malicious traffic via threat prevention. Who this book is for: This book is for network engineers, network security analysts, and security professionals who want to understand and deploy Palo Alto Networks in their infrastructure. Anyone looking for in-depth knowledge of Palo Alto Network technologies, including those who currently use Palo Alto Network products, will find this book useful. Intermediate-level network administration knowledge is necessary to get started with this cybersecurity book.

E-Business and Telecommunications

This book contains a compilation of the revised and extended versions of the best papers presented at the 16th International Joint Conference on E-Business and Telecommunications, ICETE 2019, held in Prague, Czech Republic, in July 2019. ICETE is a joint international conference integrating four major areas of knowledge that are divided into six corresponding conferences: International Conference on Data Communication Networking, DCNET; International Conference on E-Business, ICE-B; International Conference on Optical Communication Systems, OPTICS; International Conference on Security and Cryptography, SECRYPT; International Conference on Signal Processing and Multimedia, SIGMAP; International Conference on Wireless Information Systems, WINSYS. The 11 full papers presented in the volume were carefully reviewed and selected from the 166 submissions. The papers cover the following key areas: data communication networking, e-business, security and cryptography, and signal processing and multimedia applications.

Building Machine Learning Pipelines

Companies are spending billions on machine learning projects, but it's money wasted if the models can't be deployed effectively. In this practical guide, Hannes Hapke and Catherine Nelson walk you through the steps of automating a machine learning pipeline using the TensorFlow ecosystem. You'll learn the techniques and tools that will cut deployment time from days to minutes, so that you can focus on developing new models rather than maintaining legacy systems. Data scientists, machine learning engineers, and DevOps engineers will discover how to go beyond model development to successfully productize their data science projects, while managers will better understand the role they play in helping to accelerate these projects. Understand the steps to build a machine learning pipeline. Build your pipeline using components from TensorFlow Extended. Orchestrate your machine learning pipeline with Apache Beam, Apache Airflow, and Kubeflow Pipelines. Work with data using TensorFlow Data Validation and TensorFlow Transform. Analyze a model in detail using TensorFlow Model Analysis. Examine fairness and bias in your model performance. Deploy models with TensorFlow Serving or TensorFlow Lite for mobile devices. Learn privacy-preserving machine learning techniques.

Mastering Kafka Streams and ksqlDB

Working with unbounded and fast-moving data streams has historically been difficult. But with Kafka Streams and ksqlDB, building stream processing applications is easy and fun. This practical guide shows data engineers how to use these tools to build highly scalable stream processing applications for moving, enriching, and transforming large amounts of data in real time. Mitch Seymour, data services engineer at Mailchimp, explains important stream processing concepts against a backdrop of several interesting business problems. You'll learn the strengths of both Kafka Streams and ksqlDB to help you choose the best tool for each unique stream processing project. Non-Java developers will find the ksqlDB path to be an especially gentle introduction to stream processing. Learn the basics of Kafka and the pub/sub communication pattern. Build stateless and stateful stream processing applications using Kafka Streams and ksqlDB. Perform advanced stateful operations, including windowed joins and aggregations. Understand how stateful processing works under the hood. Learn about ksqlDB's data integration features, powered by Kafka Connect. Work with different types of collections in ksqlDB and perform push and pull queries. Deploy your Kafka Streams and ksqlDB applications to production.

Foundations of Data Intensive Applications

There is an ever-increasing need to store data, process it, and incorporate the resulting knowledge into the everyday business operations of companies. Before big data systems, there were high performance systems designed to do large calculations. Around the time big data became popular, high performance computing systems were mature enough to support the scientific community. But they weren't ready for the enterprise needs of data analytics. Because of the lack of system support for big data at that time, a large number of systems were created to store and process data. These systems were created according to different design principles; some thrived over the years while others did not succeed. Because of the diverse nature of the systems and tools available for data analytics, there is a need to understand these systems and their applications from a theoretical perspective. These systems shield users from the underlying details, so users work with them without knowing how they work. This is fine for simple applications, but when developing more complex applications that need to scale, users find themselves without the foundational knowledge required to reason about the issues. This knowledge is currently hidden in the systems and in research papers. The underlying principles behind data processing systems originate from the parallel and distributed computing paradigms. The many systems and APIs for data processing use the same fundamental ideas under the hood, with slight variations. We can break down data analytics systems according to these principles and study them to understand the inner workings of applications. This book defines these foundational components of large-scale, distributed data processing systems and goes into detail independently of specific frameworks. It draws on examples from current systems to explain how these principles are used in practice. Major design decisions around these foundational components define the performance, the type of applications supported, and usability. One of the goals of the book is to explain these differences so that readers can make informed decisions when developing applications. Further, it will help readers acquire in-depth knowledge and recognize problems in their applications, such as performance issues, distributed operation issues, and fault tolerance aspects. This book aims to draw on state-of-the-art research, where appropriate, to discuss ideas and the future of data analytics tools.

DAX Patterns

A pattern is a general, reusable solution to a frequent or common challenge. This book is the second edition of the most comprehensive collection of ready-to-use solutions in DAX that you can use in Microsoft Power BI, Analysis Services Tabular, and Power Pivot for Excel. The book includes the following patterns: Time-related calculations, Standard time-related calculations, Month-related calculations, Week-related calculations, Custom time-related calculations, Comparing different time periods, Semi-additive calculations, Cumulative total, Parameter table, Static segmentation, Dynamic segmentation, ABC classification, New and returning customers, Related distinct count, Events in progress, Ranking, Hierarchies, Parent-child hierarchies, Like-for-like comparison, Transition matrix, Survey, Basket analysis, Currency conversion, Budget.

Deep Learning for Coders with fastai and PyTorch

Deep learning is often viewed as the exclusive domain of math PhDs and big tech companies. But as this hands-on guide demonstrates, programmers comfortable with Python can achieve impressive results in deep learning with little math background, small amounts of data, and minimal code. How? With fastai, the first library to provide a consistent interface to the most frequently used deep learning applications. Authors Jeremy Howard and Sylvain Gugger, the creators of fastai, show you how to train a model on a wide range of tasks using fastai and PyTorch. You'll also dive progressively further into deep learning theory to gain a complete understanding of the algorithms behind the scenes. Train models in computer vision, natural language processing, tabular data, and collaborative filtering. Learn the latest deep learning techniques that matter most in practice. Improve accuracy, speed, and reliability by understanding how deep learning models work. Discover how to turn your models into web applications. Implement deep learning algorithms from scratch. Consider the ethical implications of your work. Gain insight from the foreword by PyTorch cofounder, Soumith Chintala.

Hands-On Simulation Modeling with Python

Enhance your simulation modeling skills by creating and analyzing digital prototypes of a physical model using Python programming with this comprehensive guide. Key features: Learn to create a digital prototype of a real model using hands-on examples. Evaluate the performance and output of your prototype using simulation modeling techniques. Understand various statistical and physical simulations to improve systems using Python. Book description: Simulation modeling helps you to create digital prototypes of physical models to analyze how they work and predict their performance in the real world. With this comprehensive guide, you'll understand various computational statistical simulations using Python. Starting with the fundamentals of simulation modeling, you'll understand concepts such as randomness and explore data generating processes, resampling methods, and bootstrapping techniques. You'll then cover key algorithms such as Monte Carlo simulations and Markov decision processes, which are used to develop numerical simulation models, and discover how they can be used to solve real-world problems. As you advance, you'll develop simulation models to help you get accurate results and enhance decision-making processes. Using optimization techniques, you'll learn to modify the performance of a model to improve results and make optimal use of resources. The book will guide you in creating a digital prototype using practical use cases for financial engineering, prototyping project management to improve planning, and simulating physical phenomena using neural networks. By the end of this book, you'll have learned how to construct and deploy simulation models of your own to overcome real-world challenges. What you will learn: Gain an overview of the different types of simulation models. Get to grips with the concepts of randomness and data generation process. Understand how to work with discrete and continuous distributions. Work with Monte Carlo simulations to calculate a definite integral. Find out how to simulate random walks using Markov chains. Obtain robust estimates of confidence intervals and standard errors of population parameters. Discover how to use optimization methods in real-life applications. Run efficient simulations to analyze real-world systems. Who this book is for: Hands-On Simulation Modeling with Python is for simulation developers and engineers, model designers, and anyone already familiar with the basic computational methods that are used to study the behavior of systems. This book will help you explore advanced simulation techniques such as Monte Carlo methods, statistical simulations, and much more using Python. Working knowledge of Python programming language is required.

Reversible Computation

This book constitutes the refereed proceedings of the 12th International Conference on Reversible Computation, RC 2020, held in Oslo, Norway, in July 2020. The 17 full papers included in this volume were carefully reviewed and selected from 22 submissions. The papers are organized in the following topical sections: theory and foundation; programming languages; circuit synthesis; evaluation of circuit synthesis; and applications and implementations.

The Shape of Data in Digital Humanities

Data and its technologies now play a large and growing role in humanities research and teaching. This book addresses the needs of humanities scholars who seek deeper expertise in the area of data modeling and representation. The authors, all experts in digital humanities, offer a clear explanation of key technical principles, a grounded discussion of case studies, and an exploration of important theoretical concerns. The book opens with an orientation, giving the reader a history of data modeling in the humanities and a grounding in the technical concepts necessary to understand and engage with the second part of the book. The second part of the book is a wide-ranging exploration of topics central for a deeper understanding of data modeling in digital humanities. Chapters cover data modeling standards and the role they play in shaping digital humanities practice, traditional forms of modeling in the humanities and how they have been transformed by digital approaches, ontologies which seek to anchor meaning in digital humanities resources, and how data models inhabit the other analytical tools used in digital humanities research. It concludes with a glossary chapter that explains specific terms and concepts for data modeling in the digital humanities context. This book is a unique and invaluable resource for teaching and practising data modeling in a digital humanities context.

Reversible Computation: Extending Horizons of Computing

This open access State-of-the-Art Survey presents the main recent scientific outcomes in the area of reversible computation, focusing on those that have emerged during COST Action IC1405 "Reversible Computation - Extending Horizons of Computing", a European research network that operated from May 2015 to April 2019. Reversible computation is a new paradigm that extends the traditional forwards-only mode of computation with the ability to execute in reverse, so that computation can run backwards as easily and naturally as forwards. It aims to deliver novel computing devices and software, and to enhance existing systems by equipping them with reversibility. There are many potential applications of reversible computation, including languages and software tools for reliable and recovery-oriented distributed systems and revolutionary reversible logic gates and circuits, but they can only be realized and have lasting effect if conceptual and firm theoretical foundations are established first.

Artificial Intelligence in Education

This two-volume set LNAI 12163 and 12164 constitutes the refereed proceedings of the 21st International Conference on Artificial Intelligence in Education, AIED 2020, held in Ifrane, Morocco, in July 2020.* The 49 full papers presented together with 66 short, 4 industry & innovation, 4 doctoral consortium, and 4 workshop papers were carefully reviewed and selected from 214 submissions. The conference provides opportunities for the cross-fertilization of approaches, techniques and ideas from the many fields that comprise AIED, including computer science, cognitive and learning sciences, education, game design, psychology, sociology, linguistics as well as many domain-specific areas. *The conference was held virtually due to the COVID-19 pandemic.

Microsoft Exchange Server 2016 Administration Guide: Deploy, Manage and Administer Microsoft

Discover and work with the new features in Microsoft Exchange Server 2016. Key features: Deploy Exchange 2016 in a new environment or coexisting environment with a legacy version of Exchange. Learn how to migrate your environment from Exchange 2010 or 2013 to Exchange 2016. Get familiar with Failover Cluster Manager as well as creating and managing Database Availability Groups (DAG). Learn how to migrate unified messaging using Microsoft's guidelines. Description: This book is a handy guide on how you can use the features of Microsoft Exchange Server 2016. It begins by presenting the new features of Exchange 2016 and comparing them with previous versions. This book will help you install Exchange 2016 and give you an in-depth understanding of how to configure its server end-to-end to ensure it's fully operational. You will then go through the client connectivity protocols by configuring each one of them. Later you will learn how to view, create, and configure Databases and Database Availability Groups. Next, you will perform migrations of Unified Messaging and also mailbox migrations in different ways in Exchange 2016. Lastly, you will work with the new commands of Exchange Management Shell and Exchange Admin Center. Towards the end, you will go through the common issues in Exchange 2016 and learn how to fix them. What you will learn: Learn how to configure all the client connectivity protocols. View, create, and configure Databases and Database Availability Groups. Create public folders and migrate public folders from earlier versions of Microsoft Exchange. Understand the working of Exchange Management Shell and Exchange Admin Center. Troubleshoot some common issues in Exchange 2016. Who this book is for: This book is for anyone interested in or using Microsoft Exchange 2016. It is also for professionals who have been using Microsoft Exchange 2013 and would like to get familiar with the new features of Exchange 2016. Table of Contents: 1. Introduction to Exchange 2016; 2. Installation of Exchange 2016; 3. Post Configuration; 4. Post Configuration Continued; 5. Client Connectivity; 6. Databases and Database Availability Groups; 7. Public Folders; 8. Unified Messaging; 9. Migrations; 10. Exchange Management Shell vs. EAC; 11. Troubleshooting common issues. About the Author: Edward van Biljon is an experienced Messaging Specialist with a demonstrated history of working in the information technology and services industry. He is a four-time Office Apps & Services MVP with 18 years of experience in Exchange. Edward is also a Microsoft Certified Trainer and spends a lot of time teaching Exchange and other technologies like Azure and Office 365. Edward is a passionate blogger and creates videos and articles on how to do things in Exchange or how to fix a problem in Exchange. You can also find him on the TechNet Forums, assisting people who require help with their Exchange environment. Blog links: https://collaborationpro.com https://everything-powershell.com LinkedIn profile: https://www.linkedin.com/in/edward-van-biljon-75946840

Social Robotics

This book constitutes the refereed proceedings of the 11th International Conference on Social Robotics, ICSR 2019, held in Madrid, Spain, in November 2019. The 69 full papers presented were carefully reviewed and selected from 92 submissions. The theme of the 2019 conference is: Friendly Robotics. The papers focus on the following topics: perceptions and expectations of social robots; cognition and social values for social robots; verbal interaction with social robots; social cues and design of social robots; emotional and expressive interaction with social robots; collaborative SR and SR at the workplace; game approaches and applications to HRI; applications in health domain; robots at home and at public spaces; robots in education; technical innovations in social robotics; and privacy and safety of the social robots.

Graph Transformation

This book constitutes the refereed proceedings of the 13th International Conference on Graph Transformation, ICGT 2020, held in Bergen, Norway, in June 2020.* The 16 research papers and 4 tool papers presented in this book were carefully reviewed and selected from 40 submissions. One invited paper is also included. The papers deal with the following topics: theoretical advances; application domains; and tool presentations. *The conference was held virtually due to the COVID-19 pandemic.